An AI-generated image of robots looking inside an artificial brain.

Stable Diffusion

OpenAI has published a new research paper showing that its GPT-4 language model can write explanations for the behavior of neurons in its older GPT-2 model. The work is seen as good progress in the field of “interpretability,” which seeks to explain how neural networks arrive at their outputs. The paper notes that the workings and capabilities of large language models are still not fully understood, even though the models are widely deployed across the tech world. By using GPT-4 to generate natural language explanations for the behavior of neurons in GPT-2, OpenAI aims to achieve interpretability without relying solely on manual human inspection. The black box nature of neural networks remains, however, a significant obstacle to this kind of interpretability.

OpenAI’s technique uses GPT-4 to generate human-readable explanations for the function or role of specific neurons and attention heads within the model. It also produces a quantifiable score that measures the language model’s ability to compress and reconstruct neuron activations using natural language. However, in testing, OpenAI found that both GPT-4 and a human contractor “scored poorly in absolute terms,” indicating that interpreting neurons is still a difficult task.
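To make the idea of a quantifiable score concrete, here is a minimal sketch of one way to compare a neuron's real activations with the activations predicted from a natural language explanation. It assumes a Pearson correlation as the measure of agreement; the function name `explanation_score` and the sample activation values are hypothetical and are not taken from OpenAI's code.

```python
import math


def explanation_score(real, simulated):
    """Pearson correlation between real and simulated activations.

    Assumption for illustration: agreement is measured by correlation.
    Values near 1.0 mean the explanation predicts the neuron well;
    values near 0.0 mean it predicts almost nothing.
    """
    if len(real) != len(simulated) or len(real) < 2:
        raise ValueError("need two equal-length sequences of at least 2 values")

    n = len(real)
    mean_real = sum(real) / n
    mean_sim = sum(simulated) / n

    # Covariance and variances (unnormalized; the 1/n factors cancel).
    cov = sum((r - mean_real) * (s - mean_sim) for r, s in zip(real, simulated))
    var_real = sum((r - mean_real) ** 2 for r in real)
    var_sim = sum((s - mean_sim) ** 2 for s in simulated)

    # A constant sequence carries no signal, so treat the score as zero.
    if var_real == 0 or var_sim == 0:
        return 0.0
    return cov / math.sqrt(var_real * var_sim)


# Hypothetical per-token activations for a single neuron.
real_activations = [0.0, 0.1, 2.3, 0.0, 1.9]
simulated_activations = [0.0, 0.0, 2.0, 0.5, 1.0]
print(round(explanation_score(real_activations, simulated_activations), 3))
```

A correlation ignores the overall scale of the simulated values, which suits this setting if the simulator only needs to get the pattern of high and low activations right.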

OpenAI researchers cited polysemantic neurons and “alien features” among the limitations of their method. Other limitations include being compute-intensive and only providing short natural language explanations. However, the researchers remain optimistic that the framework for machine-mediated interpretability, and the quantifiable means of measuring improvements, will lead to actionable insights into the workings of neural networks.

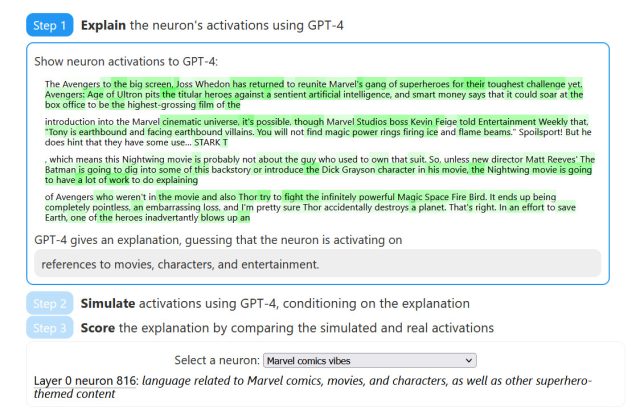

To demonstrate its progress, OpenAI has published its research paper on an interactive website featuring real-world examples. The website breaks down the method into three steps and provides a dataset of GPT-2 XL neurons and explanations on GitHub, as well as the code for Automated Interpretability.

Overall, with interpretability being a key factor in achieving AI alignment, it is essential for researchers to work toward a better understanding of how neural networks make decisions. OpenAI’s approach shows some exciting potential, even if there are still limitations to overcome.


The paper’s website includes diagrams that show GPT-4 guessing which elements of a text were generated by a certain neuron in a neural network.

Originally posted 2023-05-12 19:34:56.